By Rui Wang, CTO of AgentWeb

Robotaxis: The Ultimate AI Stress Test Coming in 2026—Are We Ready?

Have you ever found yourself stuck in traffic, glancing at the car next to you and wondering: “Will a robot ever drive that car better than a human?” If you’re like me, the answer isn’t just about tech—it's about trust. And as 2026 approaches, the world is gearing up for what I believe is the most revealing, make-or-break test for artificial intelligence: robotaxis.

Let’s cut to the chase. Here’s the short version: robotaxis will force AI to prove itself in the real world, not just in code or controlled demos. They’ll expose the true limits of machine autonomy, shape how we regulate agentic AI, and define public trust for every startup working with smart software. So, whether you’re building the next killer app or just curious about what’s next, buckle up. This isn’t just about self-driving cars. It’s about the future of how we interact with intelligent machines, everywhere.

TL;DR: Why Robotaxis Matter in 2026

- Robotaxis will be the public’s first daily contact with fully autonomous, agentic AI systems.

- Their performance will set the bar for AI trust, regulation, and what’s considered “safe enough” for deployment.

- UK robotaxi trials, covered by the Financial Times, are leading the charge in real-world stress testing.

- If robotaxis succeed, agentic AI adoption will accelerate across industries.

- If they fail—expect a regulatory clampdown and shaken public faith in AI.

Ready to dig in? Let’s talk about why robotaxis are the real stress test for AI, and why the runway to 2026 will redefine what “trustworthy AI” actually means.

The Road to 2026: Why Robotaxis Are More Than Just Smart Cars

From Hype to Reality: The AI Stress Test We Can’t Fake

I’ve watched AI hype cycles for years as both a startup founder and CTO. There’s always talk—demos, press releases, and plenty of “this changes everything” moments. But most AI breakthroughs happen behind screens, in datasets, or in simulated environments. Rarely do they spill onto the street in ways that force everyone—government, industry, and everyday people—to reevaluate what’s possible.

Robotaxis are different. They’re agentic AI in the wild—machines making real decisions in unpredictable environments. No controlled tests. No human-in-the-loop safeguards. Just a software brain powering a vehicle among cyclists, kids, buses, you name it. And everyone gets a front-row seat.

What Makes Agentic AI Unique?

Agentic AI isn’t just about automation. It’s about systems that take initiative, make complex decisions, and interact with humans on their own terms. Robotaxis are the poster child for agentic AI because:

- They must interpret ambiguous visual and auditory inputs, just like humans.

- They handle edge cases—unexpected obstacles, unclear road signs, bizarre human behaviors.

- They manage risk, liability, and ethical dilemmas in real time.

- Their decisions have direct, potentially life-or-death consequences.

If you want to know whether AI is ready to operate in the real world, robotaxis are the highest-stakes test available.

Why 2026 Is the Critical Year

Here’s what I’ve learned from tracking industry roadmaps and regulatory timelines:

- The UK, US, China, and EU are aligning policies for large-scale robotaxi deployment by late 2025 or early 2026.

- Major developers and operators (Tesla, Waymo, Cruise, Baidu) are targeting full public service launches within this window.

- Insurance, liability, and safety frameworks are being rewritten right now, with final rules expected to crystallize over the next 18 months.

- Public trust will hinge on the first months of operation. One major incident could set progress back for years.

In short, 2026 isn’t just another year—it’s the moment when agentic AI either makes the leap to everyday life or hits a wall.

UK Robotaxi Trials: A Case Study in Trust and Regulation

What’s Happening in the UK?

If you haven’t seen it yet, the Financial Times recently covered the UK’s ambitious robotaxi trials. It’s a fascinating glimpse into how a country with strict safety standards, complex urban environments, and wary regulators is approaching the AI stress test.

Here’s what stands out:

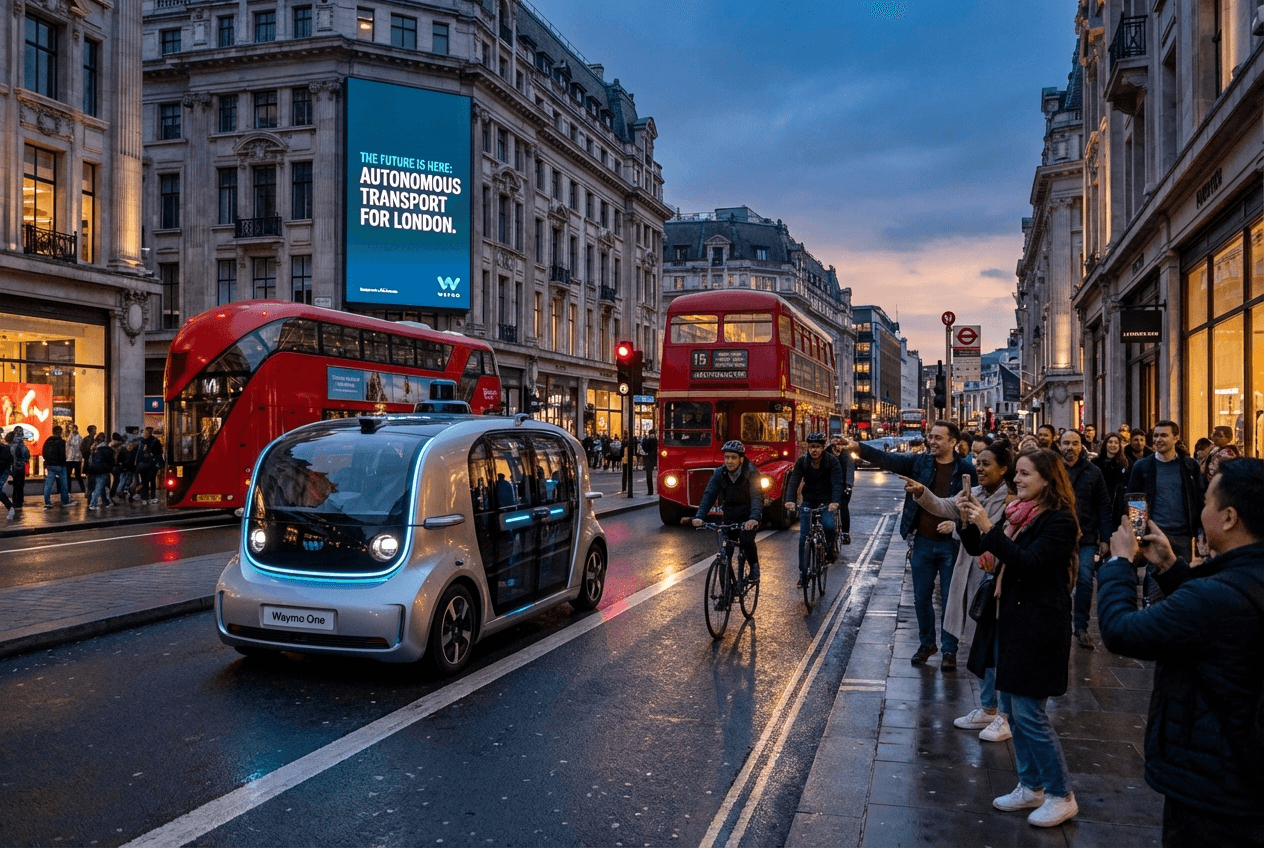

- Trials are happening in busy urban centers like London and Manchester, not just easy suburban routes.

- Vehicles must operate without safety drivers, interacting with unpredictable pedestrians and cyclists.

- Data is being collected not just on technical performance, but on public sentiment and regulatory gaps.

Real Learning in Real Streets

I recently visited one of these trial sites outside Canary Wharf. As I watched a robotaxi negotiate double-parked vans, jaywalkers, and buses weaving across lanes, I realized: there’s no substitute for real-world learning. Every weird maneuver, every split-second decision, every gesture from a confused pedestrian—it all becomes data for refining agentic AI.

UK’s Approach to Regulation: Pragmatic, But Firm

Unlike some markets that rush ahead, the UK is balancing innovation with caution:

- Strict licensing for trial operators and mandatory public liability insurance.

- Real-time data sharing with transport authorities for incident analysis.

- Ongoing consultation with consumer safety groups and local councils.

This isn’t just bureaucracy—it’s the scaffolding that could actually build public trust. If robotaxis can thrive under these constraints, it will be hard for naysayers to argue AI isn’t ready.

Implications for Startups and Tech Founders

If you’re building agentic systems—whether in mobility, healthcare, logistics, or finance—watch these UK trials closely. They’re a masterclass in:

- Navigating regulatory sandboxes.

- Proving safety in messy, unpredictable conditions.

- Building trust with users who didn’t opt in to your product.

We talk a lot about “permissionless innovation” in tech, but the UK trials show that real breakthroughs happen when you earn public permission through transparency and accountability.

Why Robotaxis Are the Perfect AI Stress Test

The High-Stakes Mix: Safety, Ethics, and Scale

Let me share something from my own experience launching agentic web platforms: the hardest part isn’t the tech. It’s managing risk, expectations, and edge cases at scale. Robotaxis face all of these—plus the challenge of literally putting lives in their “hands.”

Consider what makes the robotaxi problem unique:

- Safety: One crash can be global news. Every mile is a test of the AI stack’s resilience.

- Ethics: Who gets priority in a tight spot—a pedestrian, a cyclist, a car full of passengers?

- Scale: You’re not running a dozen test vehicles. You’re deploying thousands, each with its own context.

- Unpredictability: Unlike warehouses or factories, roads are chaotic and shaped by human behavior.

- Regulation: Standards aren’t just legal—they’re public expectations. Fail them, and regulators will pounce.

No More Controlled Environments

For years, AI has been tested in environments where variables are tightly managed—a factory floor, a Google data center, a video game. Robotaxis throw all that out the window. If the weather shifts, if a dog runs into the street, if road lines fade, the system must adapt instantly. There’s no “pause and retrain.”

This is the ultimate stress test because:

- Systems must generalize—not just memorize training data.

- Failure isn’t just a bug—it’s potentially catastrophic.

- The feedback loop is immediate and unforgiving.

If you’re wondering whether AI is ready for primetime, robotaxis will give you the answer—warts and all.

The Agentic Dilemma: Autonomy vs. Control

Here’s where things get really interesting. Unlike earlier autonomous vehicles, robotaxis operate with high agency. They don’t just follow preset rules—they make context-sensitive decisions, sometimes in direct tension with human judgment.

As founders, we face a dilemma: how much autonomy do we grant our agents before human oversight is required?

Robotaxis will force this debate into the open. Their decisions will set precedents for every agentic system that follows.
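
To make the dilemma concrete, here is a minimal sketch of one way to draw the line between autonomy and oversight. It is not how any robotaxi operator actually does it. The `Decision` fields, the thresholds, and the `autonomy_gate` function are all hypothetical names I'm using for illustration.

```python
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    PROCEED = "proceed"      # agent acts on its own
    ESCALATE = "escalate"    # hand the decision to a human operator
    SAFE_STOP = "safe_stop"  # fall back to a minimal-risk behaviour


@dataclass(frozen=True)
class Decision:
    confidence: float  # agent's own confidence estimate, 0.0 to 1.0 (hypothetical)
    severity: float    # estimated cost of being wrong, 0.0 to 1.0 (hypothetical)


def autonomy_gate(
    decision: Decision,
    min_confidence: float = 0.9,  # illustrative threshold, not a recommended value
    max_severity: float = 0.5,    # illustrative threshold, not a recommended value
    human_available: bool = True,
) -> Action:
    """Decide whether the agent may act alone or needs oversight."""
    # Act autonomously only when the agent is confident AND the stakes are low.
    if decision.confidence >= min_confidence and decision.severity <= max_severity:
        return Action.PROCEED
    # Otherwise ask a human if one can respond in time.
    if human_available:
        return Action.ESCALATE
    # No human available: choose the most conservative behaviour.
    return Action.SAFE_STOP


print(autonomy_gate(Decision(confidence=0.95, severity=0.2)))
print(autonomy_gate(Decision(confidence=0.95, severity=0.8)))
print(autonomy_gate(Decision(confidence=0.50, severity=0.2), human_available=False))
```

The design question founders have to answer is where those two thresholds sit, and who is accountable for setting them.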

Lessons from Early Trials: What’s Actually Working

Data from Real Deployments

We’ve seen robotaxi pilots in San Francisco, Beijing, and now the UK. What’s working? What’s failing?

Success Indicators:

- Low accident rates in controlled rollout phases, especially in China and California.

- Quick adaptation to new urban layouts, with AI stacks that adjust on the fly without human intervention.

- Positive public sentiment after transparent reporting of incidents and near-misses.

Pain Points:

- Edge cases like emergency vehicles, construction zones, and aggressive cyclists still challenge the best systems.

- Weather variability (rain, fog, snow) degrades sensor accuracy and decision-making.

- Insurance and liability questions remain unresolved, especially for cross-border operations.

What UK Trials Reveal About Trust

One thing I’ve noticed: UK operators spend as much effort on public education as technical deployment. They run open days, publish incident logs, and even let skeptics ride along. This proactive communication is building a foundation for agentic AI trust that goes far beyond the technology.

If you want your AI startup to succeed, take notes. Transparency isn’t just nice to have—it’s essential. When your product starts impacting people who didn’t choose it, you need to bring them into the conversation.

The Regulation Race: Who Sets the Rules for Agentic AI?

UK vs. US vs. China: A Tale of Three Approaches

Regulation is where the rubber meets the road (pun intended). Here’s how major regions are approaching the robotaxi challenge:

UK

- Collaborative sandbox trials with strong oversight.

- Mandatory insurance and public reporting.

- Emphasis on consent and public engagement before scaling.

US

- Patchwork of state-level rules, with California leading on technical benchmarks.

- Faster commercial rollout, but more public controversy after incidents.

- Ongoing debate about federal vs. state jurisdiction.

China

- Centralized, top-down regulations with rapid scaling in select cities (Beijing, Shenzhen).

- Strong government backing, but less transparency on safety incidents.

- High investment in domestic AI chipsets and sensor stacks.

Why Regulation Is a Trust Signal

I’ve heard founders complain about regulation slowing down innovation. But here’s the thing: in agentic AI, regulation is a trust signal. When authorities set clear standards and enforce them, users feel safer adopting new tech. The UK’s public-facing approach may be slower, but it’s winning hearts and minds.

The Startup Opportunity: Navigating the Maze

If you’re an AI founder, regulation can be your moat—not your enemy. Here’s how:

- Participate in sandbox trials to refine your product with real feedback.

- Build compliance into your stack early—don’t bolt it on later.

- Use regulatory achievements as marketing (for instance, a hypothetical badge such as “Certified for Urban Safety by Transport UK”).

Robotaxi trials are proving that founders who embrace regulation as part of their process build more resilient, trusted products. In 2026 and beyond, that’s a competitive advantage you can’t afford to ignore.

Real-World Deployment: Beyond the Pilot Phase

The True Test: Scaling Up Without Meltdowns

The leap from pilot to full-scale deployment is where most AI projects stumble. I’ve been through this myself—what works in a controlled rollout often breaks down when real-world complexity explodes.

For robotaxis, the key challenges are:

- Maintaining reliability when thousands of agents operate simultaneously.

- Ensuring quick response to unexpected system-wide failures (sensor outages, software bugs).

- Managing public relations after inevitable incidents.

What Startups Can Learn

Here’s my advice, drawn from both robotaxi case studies and my own startup journey:

- Design for failure. Assume things will go wrong. Build incident response into your AI architecture.

- Invest in monitoring. Real-time dashboards and alerting aren’t optional—they’re your lifeline.

- Prioritize communication. “Black box” AI is dead. Your users want to know what happened (and why).

- Leverage local partnerships. City agencies, insurers, and advocacy groups can help you weather crises.
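
As a rough illustration of the "invest in monitoring" point, here is a minimal sliding-window alert, assuming incidents arrive as timestamps in seconds. The class name `IncidentRateMonitor` and its parameters are hypothetical, and a real fleet would sit this behind a proper metrics and alerting stack.

```python
from collections import deque
from typing import Deque


class IncidentRateMonitor:
    """Fire an alert when too many incidents land inside a time window."""

    def __init__(self, window_seconds: float, max_incidents: int) -> None:
        self.window_seconds = window_seconds
        self.max_incidents = max_incidents
        self._timestamps: Deque[float] = deque()

    def record(self, timestamp: float) -> bool:
        """Record one incident; return True if the alert threshold is exceeded."""
        self._timestamps.append(timestamp)
        # Drop incidents that have aged out of the window.
        cutoff = timestamp - self.window_seconds
        while self._timestamps and self._timestamps[0] <= cutoff:
            self._timestamps.popleft()
        return len(self._timestamps) > self.max_incidents


# Alert if more than 2 incidents occur within any 60-second window.
monitor = IncidentRateMonitor(window_seconds=60, max_incidents=2)
for t in (0.0, 10.0, 20.0, 200.0):
    print(t, monitor.record(t))
```

The point of the sketch is the shape of the check: alerts key off a rate over time, not a single event, so one bad sensor reading doesn't page the whole team but a cluster of them does.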

The robotaxi rollout is a blueprint for anyone serious about agentic AI in the wild. The startups that survive will be those that treat deployment as a living process—not just a technical launch.

Public Trust: The Make-or-Break Factor

Why Trust Is Harder Than Technology

Here’s the uncomfortable truth: the technology behind robotaxis is already incredibly advanced. What’s lagging is trust. If you build perfect tech but lose public confidence, you lose everything.

UK trials are showing that trust is earned through:

- Consistent safety records.

- Transparent incident reporting.

- Active community engagement (rider feedback, open forums).

- Clear accountability for mistakes.

The Agentic AI Image Problem

Agentic AI doesn’t “look” or “feel” like a human. It’s a software-driven entity making decisions on its own. For many, that’s unsettling. Robotaxis are forcing regulators, companies, and users to confront what it means to let machines make decisions on our behalf.

I’ve spoken to skeptics who say, “I’ll never get in a driverless car.” Yet, after seeing transparent trials and riding along, some change their minds. The lesson here is clear: trust is built by showing, not telling.

What Comes After Robotaxis?

The Ripple Effect Across Industries

If robotaxis pass the stress test in 2026, the implications are massive. Every sector—from logistics to healthcare to finance—will see a green light for agentic AI deployment. Imagine:

- Autonomous delivery fleets that navigate cities solo.

- Mobility-as-a-service platforms with no drivers.

- Automated customer service agents that handle sensitive transactions.

- AI-powered medical diagnostics that make real-time decisions.

All of these rely on the same core principles: robust decision-making, transparent accountability, and resilient performance under stress.

If Robotaxis Fail: The Cautionary Tale

But what if public incidents shake confidence? A high-profile crash, a hacking scare, a regulatory freeze? That’s not just bad for robotaxi startups—it’s a chilling effect for all agentic AI markets.

Here’s what I’m watching for:

- Regulatory backlash. More rules, slower approvals, and higher insurance costs.

- Investor hesitation. Funding dries up for riskier agentic projects.

- User skepticism. Adoption rates flatline and competitors win by offering “human-in-the-loop” services.

The next few years aren’t just about robotaxis—they’re about setting the expectations for all AI agents that follow them.

Actionable Takeaways for Startup Founders

I promised actionable insights, so here’s my checklist for anyone building agentic AI as we approach 2026:

- Focus on safety first. Don't just meet minimum benchmarks—exceed them. Invest in safety assurance.

- Build transparency into your product. Make incident logs, decision rationales, and user feedback visible and accessible.

- Engage with regulators early. Be proactive, not reactive. Contribute to shaping the rules.

- Invest in public education. Host demos, publish explainers, and invite skeptics to test your technology.

- Treat deployment as an ongoing process. Rapid iteration and monitoring are more important than ever.

- Use UK trial lessons. Leverage insights from regulatory sandboxes and public trust campaigns.
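
For the transparency item, one possible starting point is a structured incident record that can be published as-is. This is only a sketch: the `IncidentRecord` fields are my assumption about what such a log might contain, and the sample values are made up for illustration.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import List


@dataclass
class IncidentRecord:
    """A publishable incident entry (hypothetical schema)."""

    incident_id: str
    occurred_at: str          # ISO 8601 timestamp
    summary: str              # what happened, in plain language
    decision_rationale: str   # why the agent acted as it did
    user_feedback: List[str] = field(default_factory=list)

    def to_public_json(self) -> str:
        # Sorted keys keep published logs stable and easy to diff.
        return json.dumps(asdict(self), indent=2, sort_keys=True)


# Made-up example values, for illustration only.
record = IncidentRecord(
    incident_id="demo-001",
    occurred_at="2026-01-01T08:30:00Z",
    summary="Agent paused a task after receiving conflicting inputs.",
    decision_rationale="Confidence fell below the configured threshold.",
)
record.user_feedback.append("The pause was explained clearly.")
print(record.to_public_json())
```

Keeping the rationale and the user feedback in the same record as the incident itself makes it harder to publish one without the other.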

Your success or failure will be shaped as much by how you handle this AI stress test as by your technical chops.

My Final Thoughts: Embracing the Robotaxi Moment

As a CTO, I’ve spent years thinking about how to transition AI from lab to street. The robotaxi rollout is the most visible, consequential experiment in agentic AI we’ve seen. It’s a stress test not just for technology, but for our capacity to trust, regulate, and deploy systems that act on our behalf.

If you’re building in this space, 2026 is your moment. Whether you’re coding the next mobility agent, building the compliance stack, or educating the public, your work will shape how society views intelligent machines for the next decade.

So let’s get ready for the real test. Learn from UK trials. Embrace transparency. Build for resilience. And keep asking: what will it take for people to let go of the wheel—and trust the agent in the driver’s seat?

I’ll be watching, riding, and reporting. Will you?

