By Rui Wang, Ph.D., CTO at AgentWeb

The moment Claude Opus 4.6 dropped, our system told on us.

Latency profiles changed. Token throughput curves shifted. A handful of agent workflows that had been stable the day before started behaving slightly differently. Nothing was broken—but it was a clear signal: a new model release had real production implications.

That's the part most people miss. Supporting a new foundation model isn't about flipping a config flag. It's about whether your system can absorb change without leaking complexity to customers.

Within a few hours of Claude Opus 4.6 being released, AgentWeb fully supported it in production.

This post explains what that actually took—and why it matters for our customers.

The Failure Mode We Design For

Every major model release introduces three predictable risks:

Behavioral drift – The same prompt can trigger different reasoning paths. A research agent that previously gathered five sources might now gather seven, or prioritize different types of information. For marketing workflows, this affects content tone, ad copy variations, and campaign strategy recommendations.

Latency variance – New models often have different performance characteristics, especially under concurrent agent execution. When you're running dozens of agents simultaneously—some doing research, others generating content, others analyzing performance data—latency changes compound quickly.

Tool-calling edge cases – Subtle schema or ordering differences in how models invoke functions can break multi-step workflows. An agent might call the right tools in a different sequence, or structure parameters slightly differently, causing downstream failures.

If your product is a demo, you can ignore these.

If your product runs customer campaigns automatically, you can't.

At AgentWeb, our agents orchestrate research, content generation, ad creation, deployment, and measurement. A small change in how a model reasons or calls tools can cascade across the entire system. One broken link in a ten-step workflow means a failed campaign launch.

So our question was simple: Can we onboard Claude Opus 4.6 without forcing customers to think about any of this?

What Actually Happened After Release

Anthropic announced the release of Claude Opus 4.6 early that morning on X (Twitter). That announcement was the external signal that kicked off our production readiness checks.

Within hours, we had:

- Validated agent planning consistency against existing workloads

- Re-run high-risk workflows (multi-step research → generation → execution)

- Applied guardrails where Opus 4.6 behaved correctly, but differently

No marketing announcement first. No blog post first.

Production confidence first.

The validation process wasn't just about running smoke tests. We replayed actual customer workflows from the previous week—campaigns that had successfully launched, content that had performed well, research agents that had delivered accurate insights. We compared how Opus 4.6 handled these same tasks against our baseline expectations.

In some cases, Opus 4.6 was objectively better. Research agents found more relevant sources faster. Content generation showed improved coherence in long-form pieces. Tool calling was more reliable in complex, nested scenarios.

But "better" still requires validation. A research agent that now pulls from academic sources when it previously favored industry blogs isn't wrong—but it changes the output profile. Our customers rely on consistent, predictable results. So even improvements need guardrails.

The Guardrails That Made This Fast

Speed wasn't the result of heroics. It was architecture.

Three design decisions made rapid model integration possible:

Model abstraction layers – Our agents don't bind directly to vendor APIs. They interact with an internal model interface that handles provider-specific quirks, rate limiting, error handling, and fallback logic. When Claude Opus 4.6 became available, we added it to the abstraction layer without touching agent code.

This separation matters because agent logic should be stable. The reasoning patterns, tool selection strategies, and workflow orchestration shouldn't change just because a model vendor updates their API. By keeping model interactions behind an abstraction, we can swap providers, versions, or even run A/B tests without rewriting agent logic.
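To make the idea concrete, here is a minimal sketch of what such an abstraction layer could look like. It is not AgentWeb's actual code: `ModelGateway`, `ModelResponse`, and the model IDs are hypothetical names, and the backends are stub functions standing in for real vendor SDK calls.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional

# Hypothetical type: a backend is any callable that turns a prompt into text.
# A real backend would wrap a vendor SDK; stubs keep this sketch offline.
Backend = Callable[[str], str]


@dataclass
class ModelResponse:
    text: str
    model_id: str  # which backend actually produced the answer


class ModelGateway:
    """Single entry point agents call instead of binding to a vendor API."""

    def __init__(self) -> None:
        self._backends: Dict[str, Backend] = {}
        self._order: List[str] = []  # fallback order, most preferred first

    def register(self, model_id: str, backend: Backend, preferred: bool = False) -> None:
        # Registering an existing ID replaces its backend and repositions it.
        self._backends[model_id] = backend
        if model_id in self._order:
            self._order.remove(model_id)
        if preferred:
            self._order.insert(0, model_id)
        else:
            self._order.append(model_id)

    def complete(self, prompt: str) -> ModelResponse:
        # Try backends in preference order; fall back when one raises.
        last_error: Optional[Exception] = None
        for model_id in self._order:
            try:
                return ModelResponse(self._backends[model_id](prompt), model_id)
            except Exception as exc:
                last_error = exc
        raise RuntimeError("no model backend available") from last_error


if __name__ == "__main__":
    gateway = ModelGateway()
    gateway.register("stable-model", lambda p: "stable answer to: " + p)
    gateway.register("new-model", lambda p: "new answer to: " + p, preferred=True)
    print(gateway.complete("summarize competitor pricing").model_id)
```

In a design like this, adding a new model is one `register` call, and agent code that only ever calls `complete` does not change.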

Deterministic checkpoints – We don't just compare final outputs. We compare agent plans at each decision point. Before an agent executes a tool call, we log what it intended to do and why. This creates a trace of reasoning that we can replay and compare across model versions.

When Opus 4.6 showed different behavior, we could pinpoint exactly where the divergence occurred. Was it during initial planning? Tool selection? Parameter formatting? This granularity made debugging fast and precise.
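A rough illustration of that comparison follows. It assumes each checkpoint records the intended tool, its parameters, and a short reason; the `Checkpoint` type and `first_divergence` helper are hypothetical names for this sketch, not our production schema.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List, Optional


@dataclass
class Checkpoint:
    """What an agent intended to do at one decision point (hypothetical shape)."""
    tool: str
    params: Dict[str, Any] = field(default_factory=dict)
    reason: str = ""  # logged for humans; ignored by the comparison below


def first_divergence(baseline: List[Checkpoint], candidate: List[Checkpoint]) -> Optional[str]:
    """Return a description of the first step where two traces differ, or None."""
    for step, (old, new) in enumerate(zip(baseline, candidate)):
        if old.tool != new.tool:
            return f"step {step}: tool selection ({old.tool} -> {new.tool})"
        if old.params != new.params:
            return f"step {step}: parameters for {old.tool}"
    if len(baseline) != len(candidate):
        # One trace is a strict prefix of the other.
        step = min(len(baseline), len(candidate))
        return f"step {step}: plan length ({len(baseline)} -> {len(candidate)})"
    return None


if __name__ == "__main__":
    old_trace = [
        Checkpoint("web_search", {"query": "crm pricing"}),
        Checkpoint("draft_copy", {"tone": "formal"}),
    ]
    new_trace = [
        Checkpoint("web_search", {"query": "crm pricing"}),
        Checkpoint("draft_copy", {"tone": "casual"}),
    ]
    print(first_divergence(old_trace, new_trace))
```

Comparing structured intent rather than free-text output is a deliberate choice here: final text can differ in harmless ways, while a changed tool or parameter is usually what breaks a downstream step.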

Fail-soft routing – New models shadow traffic before full exposure. When we first integrated Opus 4.6, it handled a small percentage of production requests in parallel with our stable models. We compared outputs, logged differences, and only increased traffic as confidence grew.

This approach catches edge cases that synthetic tests miss. Real customer workflows have complexity that's hard to replicate in staging environments—unusual prompt patterns, unexpected tool call sequences, edge cases in data formats. Shadow traffic exposes these issues without risking customer experience.
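The pattern can be sketched in a few lines. This is an assumption-laden simplification rather than our router: `in_shadow_sample` and `handle` are hypothetical names, the two models are plain callables, and the shadow call runs inline where a real system would run it concurrently and off the customer's critical path.

```python
import hashlib
from typing import Callable, List, Tuple

Model = Callable[[str], str]
Diff = Tuple[str, str, str]  # (request_id, stable_output, shadow_output_or_error)


def in_shadow_sample(request_id: str, percent: int) -> bool:
    """Deterministically place a request in one of 100 buckets by hashing its ID."""
    digest = hashlib.sha256(request_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:4], "big") % 100
    return bucket < percent


def handle(request_id: str, prompt: str, stable: Model, candidate: Model,
           percent: int, diffs: List[Diff]) -> str:
    """Always answer from the stable model; optionally shadow the candidate."""
    answer = stable(prompt)
    if in_shadow_sample(request_id, percent):
        try:
            shadow = candidate(prompt)
            if shadow != answer:
                diffs.append((request_id, answer, shadow))
        except Exception as exc:
            # A failing candidate is logged and never reaches the customer.
            diffs.append((request_id, answer, f"error: {exc!r}"))
    return answer


if __name__ == "__main__":
    logged: List[Diff] = []
    result = handle("req-42", "write a headline",
                    stable=lambda p: "Headline A",
                    candidate=lambda p: "Headline B",
                    percent=100, diffs=logged)
    print(result, logged)
```

Hashing the request ID instead of drawing a random number keeps the sample stable, so the same request is shadowed on every replay, and raising `percent` only ever adds requests to the sample.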

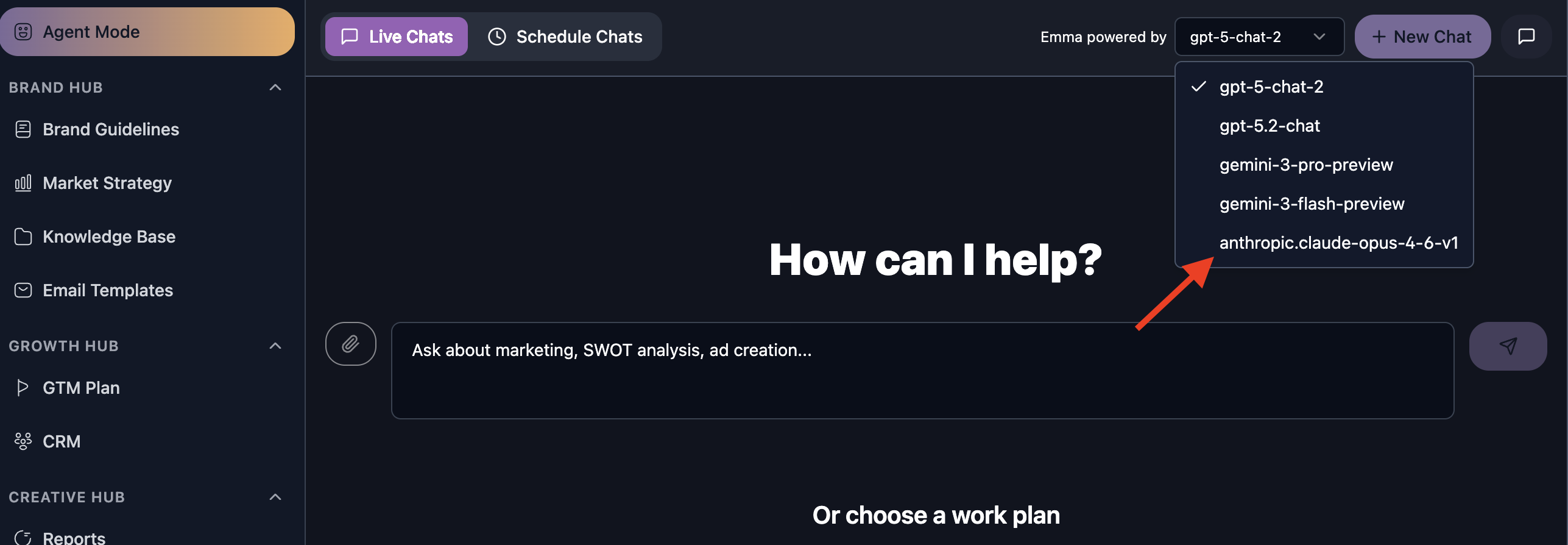

A few hours later, Claude Opus 4.6 was already runnable inside AgentWeb's Agent Mode (Emma), fully integrated into live workflows.

This is what lets us move in hours, not weeks.

Why This Matters for Customers

Most AgentWeb customers never think about Claude Opus 4.6—and that's the point.

They just see:

- Faster research agents that find relevant information in less time

- More reliable long-form generation with better coherence across sections

- Better reasoning in complex marketing workflows, especially multi-channel campaigns

- Improved tool-calling reliability when agents need to coordinate across multiple data sources

Our job is to translate frontier model progress into immediate, safe gains for startups and SMBs—without asking them to become AI infrastructure experts.

Consider what the alternative looks like. Many AI platforms require customers to manually select models, understand the tradeoffs, adjust prompts for each version, and monitor for behavioral changes. That's fine for AI researchers or large enterprises with dedicated ML teams.

But a startup founder launching a new product doesn't have time to become an expert in foundation model differences. They need their marketing agents to work consistently and improve automatically as better models become available.

That's the promise we deliver. When Anthropic releases a better Claude model, our customers benefit immediately: no configuration changes, no migration guides, nothing to think about at all.

Enterprise-grade marketing science should not require enterprise-grade AI teams.

The Operational Takeaway

If your platform can't support a top-tier model within hours of release, the bottleneck usually isn't engineering speed.

It's missing guardrails.

The actual code changes to integrate Opus 4.6 were minimal—maybe a few hundred lines across our model abstraction layer. The real work happened months ago when we built the infrastructure to make rapid integration safe and reliable.

This includes:

- Comprehensive logging at every agent decision point

- Automated comparison frameworks for cross-model validation

- Traffic routing systems that support gradual rollouts

- Monitoring dashboards that surface behavioral changes immediately

- Rollback mechanisms that can revert to stable models within seconds
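As one example from the list above, a rollback mechanism can be as small as a pointer to the active model plus a rule for when to flip it back. The sketch below is illustrative only: `RolloutGuard`, the sliding window, and the error-rate threshold are assumptions made for this example, not a description of our production policy.

```python
from collections import deque
from typing import Deque


class RolloutGuard:
    """Tracks recent outcomes for a candidate model and reverts on high failure rate."""

    def __init__(self, stable: str, candidate: str,
                 window: int = 50, max_error_rate: float = 0.2) -> None:
        self.stable = stable
        self.active = candidate  # start by serving the candidate
        self.max_error_rate = max_error_rate
        self._results: Deque[bool] = deque(maxlen=window)

    def record(self, ok: bool) -> str:
        """Record one request outcome and return the model to use next."""
        if self.active == self.stable:
            return self.active  # already rolled back; stay there
        self._results.append(ok)
        window_full = len(self._results) == self._results.maxlen
        error_rate = self._results.count(False) / len(self._results)
        if window_full and error_rate > self.max_error_rate:
            self.active = self.stable  # the rollback is a single assignment
        return self.active


if __name__ == "__main__":
    guard = RolloutGuard("stable-model", "new-model", window=4, max_error_rate=0.25)
    for outcome in (True, False, False, False):
        active = guard.record(outcome)
    print(active)
```

Because the switch is just a pointer change behind the model interface, reverting does not require a deploy, which is what makes a rollback on the order of seconds plausible.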

At AgentWeb, we design for change as the steady state. Claude Opus 4.6 was just the latest proof that the system behaves the way we expect when the ground shifts.

The AI landscape moves fast. Anthropic, OpenAI, Google, and others release new models regularly. Each release brings improvements—better reasoning, faster inference, more reliable tool use. But each release also brings risk.

Platforms that can't absorb these changes quickly force a choice on customers: stay on older models and miss improvements, or accept disruption with each upgrade. Neither option is acceptable for production systems.

We chose a third path: build infrastructure that makes model upgrades invisible to customers while maintaining the safety and reliability they depend on.

More models will come. Faster releases will follow.

Our commitment is simple: when the best tools become available, our customers get them—without friction, without downtime, without thinking about it.

That's what infrastructure should do. It should get out of the way and let you focus on building your business, not managing AI complexity.
