The Constraint Has Moved Down the Stack

The AI race isn't about who builds the smartest model anymore. It's about who can actually manufacture, deploy, and scale the infrastructure that powers those models.

Recent analysis of ASML and Nvidia points to a fundamental shift in where competitive advantage lives in the AI ecosystem. While headlines obsess over the latest LLM benchmarks and training runs, the real bottleneck has quietly moved to something far less glamorous: chip manufacturing capacity, power distribution, and systems integration.

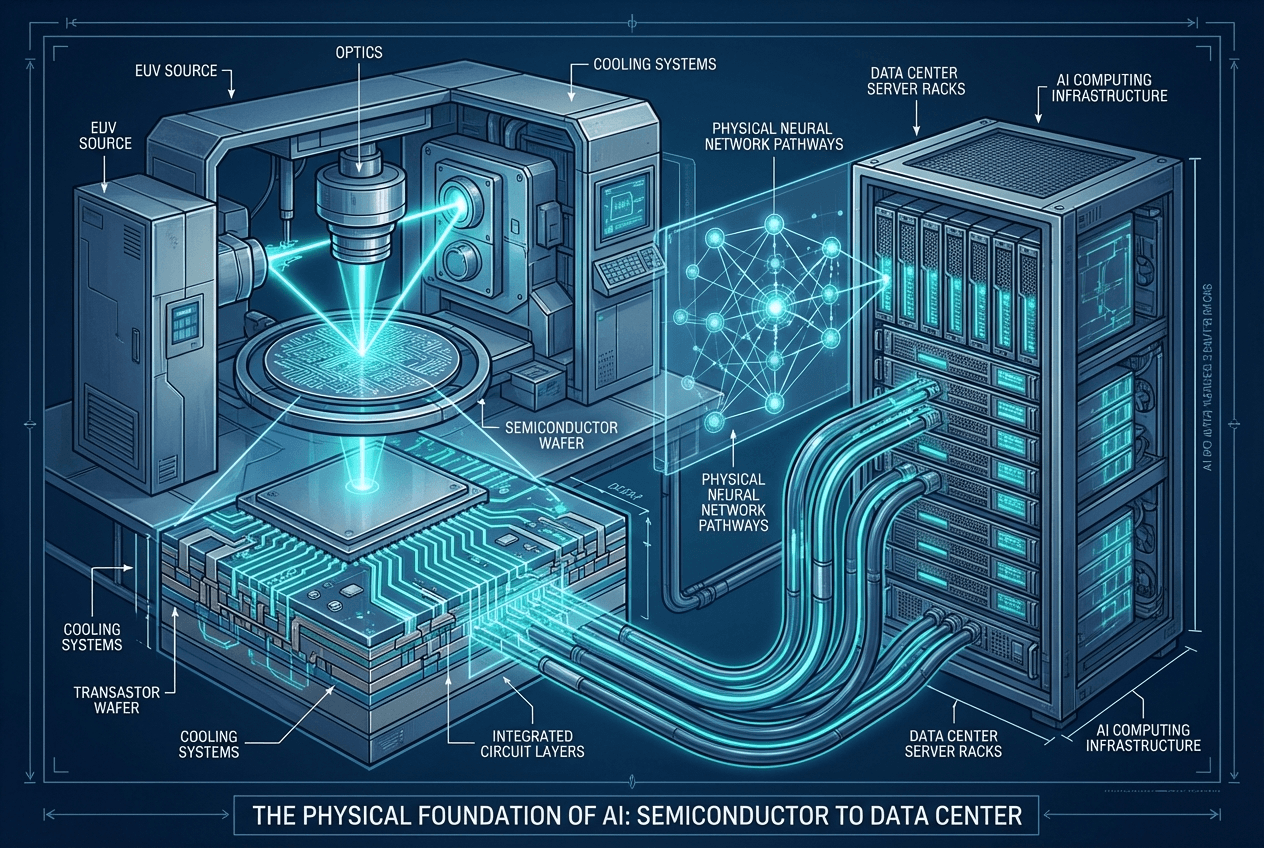

ASML, the Dutch company behind extreme ultraviolet (EUV) lithography machines, illustrates this shift perfectly. It is the only company in the world that makes EUV systems, and leading-edge fabs rely on them to print the most advanced chips, including the GPUs and accelerators that power modern AI systems. No ASML, no cutting-edge chips. No chips, no AI at scale. It's that simple.

This isn't just a supply chain story. It's a fundamental reordering of where leverage exists in the AI stack—and it has direct implications for how founders should think about building competitive advantage.

Why Infrastructure Became the New Frontier

For the past several years, AI progress was synonymous with model architecture breakthroughs. Transformers. Attention mechanisms. Scaling laws. Each advance pushed the frontier of what was possible, and the companies with the best research teams dominated the conversation.

But something changed around 2023-2024. Models became "good enough" for a massive range of practical applications. For most real-world use cases, GPT-4-level capability is no longer the constraint. The constraint is whether you can actually run these models reliably, efficiently, and at scale.

This shift manifests in several ways:

Manufacturing Capacity: Building advanced AI chips requires EUV lithography machines that cost $200+ million each, have long production lead times, and are made by a single company. ASML shipped roughly 50 EUV systems in 2023, and that output rate is a bottleneck for the entire global AI infrastructure buildout.

Power and Cooling: The largest AI data centers require power infrastructure that rivals small cities, and even a single cluster of a few thousand H100 GPUs can draw multiple megawatts. For many AI deployments, the limiting factor is not compute availability but whether the local power grid can support the load.

System Integration: Running AI at scale isn't about dropping GPUs into racks. It's about networking, storage, memory bandwidth, software stacks, and orchestration layers that work together seamlessly. This is systems engineering, not computer science research.

Economic Efficiency: At production scale, inference costs dominate. A model that's 10% better but requires 2x the compute often loses. Optimization at every layer of the stack, from silicon design to model quantization to serving infrastructure, determines who can actually afford to run AI profitably.
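To put rough numbers on the power point above, here is a back-of-envelope estimate of facility power for a GPU cluster. It is a sketch under stated assumptions, not a sizing tool. The 700 W figure is the H100 SXM's rated maximum TDP. The 50% server overhead (CPUs, memory, NICs, fans) and the power usage effectiveness (PUE) of 1.3 are illustrative assumptions, and real facilities vary widely.

```python
def cluster_power_mw(num_gpus: int,
                     gpu_watts: float = 700.0,      # H100 SXM maximum TDP
                     server_overhead: float = 0.5,  # assumed: CPUs, memory, NICs, fans as a fraction of GPU power
                     pue: float = 1.3) -> float:    # assumed power usage effectiveness (cooling, power conversion)
    """Back-of-envelope facility power for a GPU cluster, in megawatts."""
    it_watts = num_gpus * gpu_watts * (1.0 + server_overhead)  # power drawn by the servers themselves
    facility_watts = it_watts * pue                             # plus cooling and distribution losses
    return facility_watts / 1e6


for gpus in (1_000, 10_000, 100_000):
    print(f"{gpus:>7,} GPUs: ~{cluster_power_mw(gpus):.1f} MW")
```

Under these assumptions, 1,000 GPUs come to roughly 1.4 MW and 100,000 GPUs to roughly 137 MW. At that scale, local grid capacity becomes a real siting constraint.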

This is why Nvidia's market position is so dominant, and why the ASML supply chain is so critical to it: Nvidia's leading GPUs are fabricated by TSMC on process nodes that depend on ASML's EUV machines. Nvidia doesn't just sell GPUs. It sells an entire ecosystem of hardware, software (including CUDA), networking, and tooling that works together. That integration is very hard to replicate, even for companies with comparable chip designs.

The New Competitive Landscape

This infrastructure-first reality creates a different competitive landscape than most founders expect.

Vertical Integration Matters Again: The companies winning in AI aren't just building software or just building chips. They're building full-stack solutions where hardware and software are co-designed. Apple's approach with their M-series chips and on-device AI capabilities exemplifies this. So does Tesla's Dojo supercomputer, custom-built for their specific training workloads.

Infrastructure Awareness Becomes Product Strategy: Products that assume infinite, cheap compute will lose to products designed around infrastructure constraints. This means thinking about model size, inference costs, latency requirements, and deployment topology from day one—not as an afterthought.

Orchestration Over Intelligence: A system that reliably coordinates multiple specialized models often outperforms a single "smarter" model. This is why agentic architectures are gaining traction. The value isn't in having one super-intelligent model—it's in orchestrating multiple models, tools, and workflows efficiently.

Distribution and Access Trump Raw Capability: If you can't access the infrastructure to run cutting-edge models, their capabilities are theoretical. This is why API access, inference partnerships, and edge deployment strategies matter as much as model performance.
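The model-size point under "Infrastructure Awareness Becomes Product Strategy" can be made concrete with simple arithmetic. Weight memory is roughly parameter count times bytes per parameter. The sketch below uses the 80 GB H100 variant and assumes that only 80% of GPU memory is available for weights, with the rest reserved for KV cache and activations. That headroom figure is an illustrative assumption. The sketch also ignores parallelism overheads.

```python
import math

# Bytes needed to store one parameter at common inference precisions.
BYTES_PER_PARAM = {"fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(num_params: float, precision: str) -> float:
    """Memory for the model weights alone, in GB (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


def min_gpus_for_weights(num_params: float, precision: str,
                         gpu_memory_gb: float = 80.0,   # H100 80 GB variant
                         usable_fraction: float = 0.8,  # assumed; rest reserved for KV cache, activations
                         ) -> int:
    """Smallest GPU count whose usable memory holds the weights."""
    usable_gb = gpu_memory_gb * usable_fraction
    return math.ceil(weight_memory_gb(num_params, precision) / usable_gb)


for params in (8e9, 70e9):
    for precision in ("fp16", "int8", "int4"):
        print(f"{params / 1e9:.0f}B @ {precision}: "
              f"{weight_memory_gb(params, precision):6.1f} GB weights, "
              f"{min_gpus_for_weights(params, precision)} GPU(s)")
```

Under these assumptions, a 70B-parameter model needs about 140 GB for FP16 weights, which takes three GPUs, but only about 35 GB at 4-bit, which fits on one. Quantization usually costs some quality, so that trade-off has to be measured per task.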

What This Means for Startup Founders

If you're building in the AI space right now, this shift has concrete implications for your strategy:

1. Design for Real-World Constraints: Don't build assuming you'll have unlimited access to the latest GPUs. Design your product around what infrastructure you can reliably access and afford. This might mean smaller, specialized models instead of frontier models. It might mean edge deployment instead of cloud-only. It might mean clever caching and batching instead of real-time inference for everything.

2. Think in Systems, Not Features: Your competitive moat likely isn't a better model—it's better integration. How well does your system handle failures? How efficiently does it batch requests? How intelligently does it route between different model sizes and providers? These systems-level decisions create defensibility that pure model performance doesn't.

3. Build for Orchestration and Reliability: The future of AI products isn't standalone tools—it's systems that coordinate multiple capabilities reliably. An agentic system that consistently executes a workflow end-to-end beats a more "intelligent" system that fails 10% of the time. Reliability and orchestration are product features, not infrastructure concerns.

4. Watch the Infrastructure Layer Closely: Understanding the constraints and opportunities in the infrastructure layer helps you make better product decisions. When will new chip generations arrive? What are the real costs of different deployment options? Where are the bottlenecks in the current ecosystem? These aren't just technical details—they're strategic intelligence.

5. Optimize for Total Cost of Ownership: A model that costs half as much to run but performs 5% worse often wins in production. Think about the full economic picture: inference costs, development costs, maintenance burden, infrastructure complexity. The cheapest solution to build isn't always the cheapest solution to operate.
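Whether the cheaper model wins depends on what a failure costs. The sketch below compares two simple cost models with hypothetical prices and success rates. In the first, failed attempts are just retried. Attempts until success are geometric, so the expected cost is cost per call divided by success rate. In the second, each failure is escalated to a fallback, such as human review, at a fixed assumed cost. None of these numbers come from a real provider.

```python
from dataclasses import dataclass


@dataclass
class ModelOption:
    name: str
    cost_per_call: float  # hypothetical inference cost per task attempt, USD
    success_rate: float   # hypothetical probability one attempt is acceptable


def cost_with_retries(m: ModelOption) -> float:
    """Expected cost per successful task when failed attempts are retried.
    The number of attempts is geometric with mean 1 / success_rate."""
    return m.cost_per_call / m.success_rate


def cost_with_escalation(m: ModelOption, failure_cost: float) -> float:
    """Expected cost per task when each failure goes to a fallback
    (e.g. human review) that costs `failure_cost`."""
    return m.cost_per_call + (1.0 - m.success_rate) * failure_cost


big = ModelOption("large", cost_per_call=0.020, success_rate=0.95)
small = ModelOption("small", cost_per_call=0.010, success_rate=0.90)

for m in (big, small):
    print(f"{m.name}: retry ${cost_with_retries(m):.4f}, "
          f"escalate ${cost_with_escalation(m, failure_cost=1.00):.4f}")
```

With plain retries, the cheaper model wins at about $0.011 versus $0.021 per successful task. Once each failure carries $1.00 of downstream cleanup, the larger model wins at about $0.07 versus $0.11. The same two models rank differently depending on the full cost picture.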

How We Apply This at AgentWeb

At AgentWeb, this infrastructure-first mindset shapes everything we build. Our agentic marketing systems aren't designed to showcase the latest model capabilities—they're designed to reliably execute complex marketing workflows at scale.

This means:

- Multi-model orchestration that routes tasks to the most cost-effective model that can handle them, not always the most capable

- Infrastructure-aware scheduling that batches work efficiently and handles rate limits and failures gracefully

- Reliability-first architecture where consistent execution matters more than occasional brilliance

- Cost optimization at every layer, from prompt engineering to model selection to caching strategies
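The routing and failure-handling ideas in the list above can be sketched as a small cost-aware router. This is a minimal illustration, not AgentWeb's implementation. The tier names, prices, 1-10 difficulty scale, `TransientError`, and the injected `call` function are all hypothetical stand-ins for a real provider client. The router sends each task to the cheapest tier rated for its difficulty, retries transient errors with exponential backoff, and falls back to the next more expensive capable tier if one keeps failing.

```python
from __future__ import annotations

import time
from dataclasses import dataclass
from typing import Callable, Sequence


class TransientError(Exception):
    """Retryable failure such as a rate limit or timeout (hypothetical)."""


@dataclass(frozen=True)
class ModelTier:
    name: str
    cost_per_call: float  # hypothetical USD per call
    max_difficulty: int   # hypothetical capability ceiling on a 1-10 scale


def route(task_difficulty: int,
          tiers: Sequence[ModelTier],
          call: Callable[[ModelTier], str],
          max_retries: int = 3,
          base_delay_s: float = 0.5,
          sleep: Callable[[float], None] = time.sleep) -> tuple[str, str]:
    """Send the task to the cheapest tier rated for its difficulty.

    Transient errors are retried with exponential backoff; if a tier keeps
    failing, fall back to the next more expensive capable tier.
    Non-transient exceptions propagate immediately.
    """
    capable = sorted((t for t in tiers if t.max_difficulty >= task_difficulty),
                     key=lambda t: t.cost_per_call)
    if not capable:
        raise ValueError(f"no tier can handle difficulty {task_difficulty}")
    last_error: Exception | None = None
    for tier in capable:
        for attempt in range(max_retries):
            try:
                return tier.name, call(tier)
            except TransientError as err:
                last_error = err
                if attempt < max_retries - 1:
                    sleep(base_delay_s * 2 ** attempt)  # 0.5 s, 1 s, 2 s, ...
    raise RuntimeError("all capable tiers failed") from last_error


# Usage with a simulated provider in which the medium tier is rate-limited.
tiers = [ModelTier("small", 0.001, 4),
         ModelTier("medium", 0.01, 7),
         ModelTier("large", 0.05, 10)]


def flaky_call(tier: ModelTier) -> str:
    if tier.name == "medium":
        raise TransientError("429: rate limited")
    return f"done by {tier.name}"


def no_wait(seconds: float) -> None:
    """Skip real sleeping in the demo."""


print(route(3, tiers, flaky_call, sleep=no_wait))  # ('small', 'done by small')
print(route(6, tiers, flaky_call, sleep=no_wait))  # ('large', 'done by large')
```

The `sleep` function is injectable so that backoff can be tested without waiting. A production router would also need per-provider rate-limit budgets, timeouts, and a real quality signal to replace the static difficulty scale.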

We're not trying to build the smartest AI marketing assistant. We're building the most reliable system for actually getting marketing work done—which turns out to be a much harder and more valuable problem.

The Boring Work Is Where Leverage Lives

The next wave of AI winners won't be determined by who trains the biggest model or publishes the most impressive benchmark results. It will be determined by who masters the unglamorous work of infrastructure, integration, and execution at scale.

This is actually good news for founders. The infrastructure constraint means that success isn't just about having the best research team or the most compute budget. It's about making smart systems-level decisions, understanding real-world constraints, and building products that work reliably within those constraints.

The companies that figure out how to build AI products that are not just capable, but actually deployable, affordable, and reliable—those are the companies that will capture value over the next decade.

ASML and Nvidia understand this. They're not competing on who has the best technology in isolation—they're competing on who enables an entire ecosystem to function. That's where real leverage lives in the AI stack.

For founders, the lesson is clear: stop obsessing over model capabilities and start obsessing over systems integration. The infrastructure layer isn't a distraction from your product—it is your product strategy.

Rui Wang, Ph.D. is CTO of AgentWeb, where he builds agentic marketing systems focused on reliability and execution at scale.
